
Revision as of 18:37, 8 Feb 2024

LOREN

LOw-delay congestion control for REal-time applications over the iNternet

Description of the Project

Congestion control in packet networks has historically focused on avoiding network overload while providing full network utilization. The cornerstone result was the well-known TCP congestion and flow control algorithm proposed by Van Jacobson.

The goal of this project is to propose an algorithm that not only avoids network congestion while providing high utilization but also controls the network delay. Controlling the delay is of the utmost importance since the Internet is not only aimed at delay-insensitive data traffic, but also at real-time communications (e.g., video chat, live streaming, virtual reality) and real-time control (e.g., autonomous driving, telerobotics, telesurgery).

Starting from the state of the art, the first goal will be to design the fundamental functions of the algorithm using a rigorous theoretical approach based on control theory, so that algorithm properties such as stability and efficiency can be mathematically proven. The algorithm will be designed by taking into account both well-behaved wired connections and the new and more challenging 5G networks, which have shown the need for a more responsive congestion control algorithm. In fact, recent literature has shown that the 5G link introduces fast on-off connectivity periods that, due to the high 5G bandwidth and the TCP cyclic probing phases, lead to high retransmission rates when using classic TCP (e.g., NewReno, Cubic, BBR).

The algorithm will be designed in accordance with the end-to-end principle. However, feedback and control functions implemented or envisaged in current and evolving networks will also be considered, namely: Software Defined Networks (SDN) functionalities that can be used for traffic engineering, e.g., to steer aggregate traffic flows according to load balancing principles; local cross-layer optimization in the 5G/6G context, with highly variable links and smart scheduling algorithms in the lower layers; and in-band network telemetry functionalities.

The second goal will be to investigate the trade-offs of implementing the end-to-end algorithms at the application layer over UDP or at the transport layer (TCP over IP), depending on the target applications. Implementation at the application layer has the advantage that different algorithms can be designed and tuned for specific classes of applications with different delay and responsiveness requirements.

The third goal will be to carry out an extensive experimental evaluation in two scenarios: (a) production networks, by leveraging an established collaboration with researchers at Google [Car17], aiming at contributing to the development of the WebRTC standard used for videoconferencing; (b) autonomous driving, where mobile robots need to communicate with the infrastructure (e.g., a tracking system) or with each other over data and audio/video channels.

Objectives and Methodologies

Over the past two decades, the Internet has evolved from a platform for data transport into a platform for real-time communications such as audio/video conferencing, streaming video, WebTV, online games, and real-time control.

Real-time flows, unlike data streams, pose stringent requirements both in terms of bitrate and maximum tolerable delay. The standard TCP congestion control has been designed to deliver greedy data streams with the goal of avoiding congestion while providing full link utilization. In fact, the TCP congestion control continuously probes for available network bandwidth through periodic cycles during which network queues are first filled and then drained. This behavior is not suitable for delivering delay-sensitive traffic. For this reason, video-conferencing applications implement their own congestion control and (optionally) data integrity protection functions at the application layer over UDP, which provides neither congestion control nor retransmissions.

The overall goal of this project is to design a congestion control algorithm for real-time flows as a key enabling technology for the real-time Internet.

On the application side, a specific focus will be devoted to 5G radio access. In fact, the large bandwidth promised by new radio access technologies (e.g., mmWave) and the volatility of this bandwidth pose a hard challenge to end-to-end congestion control [Zha19].

End-to-end low-delay congestion control

Unlike what has been proposed in the literature, the congestion control algorithm will be rigorously designed based on feedback control theory in order to guarantee properties such as stability, robustness, and speed of convergence.

The proposed algorithms will be tested in the laboratory as well as in production networks. In particular, we will access the testing capabilities provided by Google, which allow experimenting with new congestion control algorithms in the browser.

This activity is made possible by leveraging our consolidated research collaboration with Google, which allows us to experiment in the wild on a large population of Google Chrome users. Moreover, we will test the proposed algorithms in a 5G testbed in collaboration with Prof. Alay Ozgu at the Department of Informatics at the University of Oslo, where she leads the Gemini IoT Center and is involved in building a 5G and IoT testbed through the 5GENESIS EU project.

We will consider different control architectures in which the congestion control algorithm is placed at the sender, at the receiver, or in a hybrid configuration, i.e., partly at the sender and partly at the receiver.

For control loops closed over the Internet, where significant feedback delay cannot be avoided, robust controllers with a modified Smith predictor will be considered [Mas99], [DeC11].

In the case of real-time (i.e., delay-sensitive) flows, the key issue is that not only congestion avoidance but also control of network queue lengths is required to limit delays. The idea is to exploit packet delay measurements for feedback.

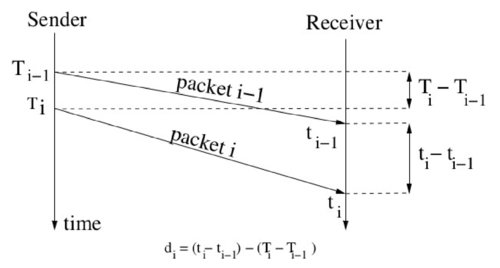

We will consider the one-way delay variation d_i and the packet Inter-Arrival Time (IAT) at the receiver, IAT_i = t_i – t_{i-1}, that can be measured as shown in the figure below, where T_i represents the time at which the i-th packet has been sent and t_i is the time at which the packet has been received (T_i is timestamped in the packet). It is very important to note that this end-to-end measurement can be easily executed and represents a solid starting point to establish a feedback-based control of connection delays.
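The two measurements can be sketched in a few lines of Python. The text defines IAT_i explicitly; the expression used here for d_i, namely the inter-arrival time minus the inter-departure time, d_i = (t_i - t_{i-1}) - (T_i - T_{i-1}), is the usual definition and is an assumption, as is the function name `delay_samples`.

```python
from typing import List, Tuple


def delay_samples(send_ts: List[float], recv_ts: List[float]) -> List[Tuple[float, float]]:
    """Return (IAT_i, d_i) for every packet i >= 1.

    send_ts[i] is T_i (sender timestamp carried in the packet),
    recv_ts[i] is t_i (arrival time at the receiver).
    Assumed definition: d_i = (t_i - t_{i-1}) - (T_i - T_{i-1}).
    """
    if len(send_ts) != len(recv_ts):
        raise ValueError("send_ts and recv_ts must have the same length")
    samples = []
    for i in range(1, len(recv_ts)):
        iat = recv_ts[i] - recv_ts[i - 1]              # inter-arrival time
        inter_departure = send_ts[i] - send_ts[i - 1]  # inter-departure time
        samples.append((iat, iat - inter_departure))
    return samples
```

Because only differences of timestamps taken on the same clock are used, a constant offset between the sender and receiver clocks cancels out, so the two clocks do not need to be synchronized.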

An increase in the one-way delay variation, or jitter, indicates that the queueing delay along the forward communication path is increasing because queues are filling up, which in turn signals the onset of network congestion.

One-way delay variation measurements are promising for queuing control since they avoid the “latecomer effect” [Car10] and sensitivity to the reverse-path traffic [Mas06]. Measuring IAT variations at the sender is an extremely quick method to estimate the bandwidth available at the bottleneck and also the bottleneck capacity.
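As a rough illustration of why inter-arrival times carry capacity information, the sketch below applies the classic packet-pair dispersion idea: two packets sent back to back leave the bottleneck spaced by the time needed to serialize one of them. This is an assumption about the kind of estimator meant here, and the function name is hypothetical.

```python
def capacity_estimate_bps(packet_size_bytes: int, iat_seconds: float) -> float:
    """Packet-pair estimate of the bottleneck capacity in bit/s.

    Assumes the two packets were sent back to back and that no cross
    traffic was queued between them at the bottleneck.
    """
    if iat_seconds <= 0:
        raise ValueError("inter-arrival time must be positive")
    return 8 * packet_size_bytes / iat_seconds
```

In practice a single pair is noisy, so such estimates are normally aggregated over many pairs before use.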

Measuring the delay variations experienced by packets traveling from a sender to a receiver is challenging because one-way delay variations are affected by unknown packet processing times and, in the case of wireless links, by lower-layer retransmissions, both of which can be modeled as noise. A statistical characterization of the noise will be carried out in different scenarios such as wired and mobile networks. Then, based on this characterization, we will investigate and compare approaches to filter out the network noise, such as a Kalman filter. In the case of data center networks, it has been shown that delay gradient measurements can be done in network interface cards [Mit15].
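A minimal sketch of the filtering step, assuming a scalar random-walk model in which the measured variation d_i equals the queuing delay variation m_i plus zero-mean noise. The class name, the noise variances `q` and `r`, and the initial values are illustrative assumptions; in the project they would come from the statistical characterization described above.

```python
class ScalarKalman:
    """Scalar Kalman filter for m_i = m_{i-1} + w_i, d_i = m_i + v_i."""

    def __init__(self, q: float = 1e-3, r: float = 1e-1,
                 m0: float = 0.0, p0: float = 1.0) -> None:
        self.q = q    # process noise variance (assumed)
        self.r = r    # measurement noise variance (assumed)
        self.m = m0   # current estimate of m_i
        self.p = p0   # variance of the estimation error

    def update(self, d: float) -> float:
        p_pred = self.p + self.q             # predict: uncertainty grows
        gain = p_pred / (p_pred + self.r)    # Kalman gain in (0, 1)
        self.m += gain * (d - self.m)        # correct with the innovation
        self.p = (1.0 - gain) * p_pred
        return self.m
```

A larger `r` makes the estimate smoother but slower to react, which is the trade-off the noise characterization is meant to settle.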

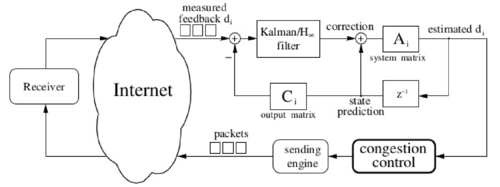

The figure below shows a schematic of the filter and of the congestion control algorithm.

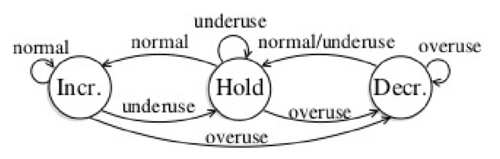

The estimated one-way delay variation will be used to feed a classic additive increase multiplicative decrease congestion control algorithm. When the estimated queueing delay gradient reaches a threshold, the congestion control algorithm will enter the multiplicative decrease phase or an adaptive decrease phase that uses an end-to-end bandwidth estimate [Gri04]. This will be a major change with respect to the current implementation of the Google Congestion Control (GCC) [Car17], where the congestion control is driven by the state machine at the receiver shown in the figure below, within the complete architecture.
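The control law described in this paragraph can be sketched as a single update step. The function name, the additive step, and the decrease factor are illustrative assumptions, not values from the project; the adaptive branch simply caps the rate at the bandwidth estimate, which is one possible reading of an adaptive decrease.

```python
from typing import Optional


def aimd_step(rate_bps: float, delay_gradient: float, threshold: float,
              increase_bps: float = 50_000.0, decrease_factor: float = 0.85,
              bandwidth_estimate_bps: Optional[float] = None) -> float:
    """One AIMD update driven by the estimated queueing delay gradient."""
    if delay_gradient > threshold:
        if bandwidth_estimate_bps is not None:
            # Adaptive decrease: fall back to the end-to-end bandwidth estimate.
            return min(rate_bps, bandwidth_estimate_bps)
        # Multiplicative decrease.
        return decrease_factor * rate_bps
    # Additive increase while the gradient stays below the threshold.
    return rate_bps + increase_bps
```

For example, `aimd_step(1e6, 2.0, 1.0, bandwidth_estimate_bps=7e5)` drops the rate to the estimate instead of applying the fixed factor.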

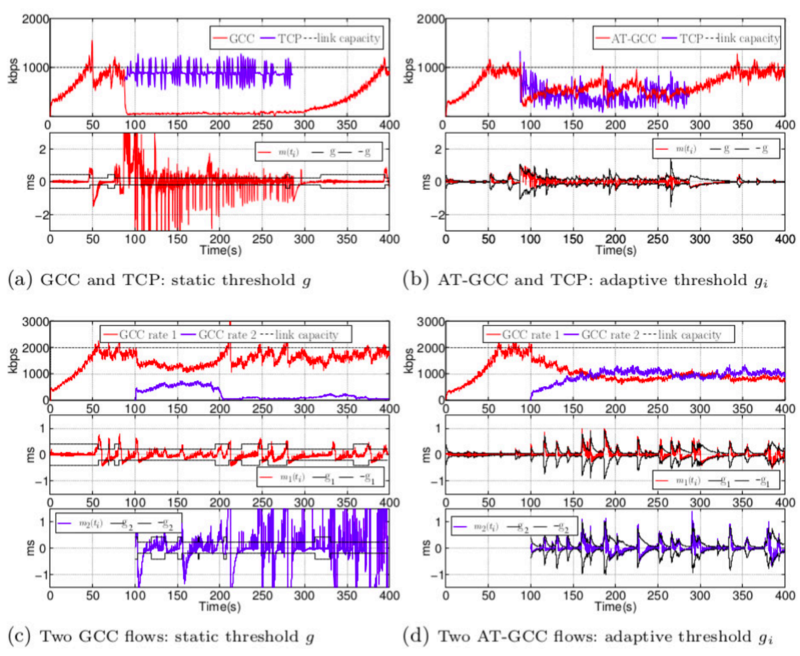

In detail, a GCC flow is controlled at the sender by a Multiplicative Increase Multiplicative Decrease (MIMD) loss-based algorithm and at the receiver by a delay-based algorithm that measures and compares the one-way queuing time variation m_i with a threshold g_i.

The sending rate is set as the minimum of the rates computed by these two controllers, which are A_s and A_r respectively. At the receiver, the remote rate controller is the finite state machine (FSM) shown in the figure above, whose state is changed by the signal s_i produced by the over-use detector. The detector is in turn fed by the output of the arrival-time filter, which estimates m_i. The FSM is used to compute A_r. The REMB Processing block sends REMB messages containing A_r to the sender.
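A hedged sketch of the receiver-side state machine and of the rate combination follows. The transition table and the rate updates follow the publicly documented GCC Internet-Draft as far as we are aware, and should be read as an assumption rather than as a transcription of the figure; the gains `eta` and `alpha` and all names are illustrative.

```python
# (current state, detector signal s_i) -> next state
TRANSITIONS = {
    ("hold", "overuse"): "decrease",
    ("hold", "normal"): "increase",
    ("hold", "underuse"): "hold",
    ("increase", "overuse"): "decrease",
    ("increase", "normal"): "increase",
    ("increase", "underuse"): "hold",
    ("decrease", "overuse"): "decrease",
    ("decrease", "normal"): "hold",
    ("decrease", "underuse"): "hold",
}


def remote_rate_step(state: str, signal: str, a_r: float, received_rate: float,
                     eta: float = 1.05, alpha: float = 0.85):
    """Advance the FSM by one signal and return (new_state, new A_r)."""
    new_state = TRANSITIONS[(state, signal)]
    if new_state == "increase":
        a_r = eta * a_r                # multiplicative increase
    elif new_state == "decrease":
        a_r = alpha * received_rate    # back off below the measured receive rate
    return new_state, a_r              # "hold" leaves A_r unchanged


def sending_rate(a_s: float, a_r: float) -> float:
    """The sender uses the minimum of the loss-based and delay-based rates."""
    return min(a_s, a_r)
```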

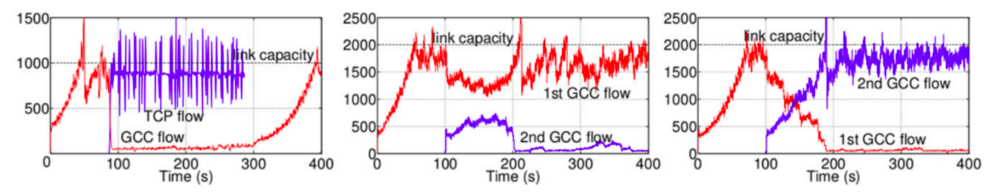

The figure below shows that a GCC flow using a constant threshold gets starved when a concurrent TCP flow starts. We have found that starvation also occurs when two coexisting GCC flows share a bottleneck [Pav13].

To overcome these issues, we have proposed the following adaptive threshold g_i to be used by the over-use detector [Car17, Hol15]:
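A minimal sketch of an adaptive threshold of this kind. The update rule and the gain values follow the publicly documented GCC Internet-Draft and are an assumption about the intended form; the function and parameter names are hypothetical. The threshold g_i tracks |m_i|, rising quickly when |m_i| exceeds it and decaying slowly otherwise:

```python
def update_threshold(g_prev: float, m: float, dt_ms: float,
                     k_up: float = 0.01, k_down: float = 0.00018) -> float:
    """g_i = g_{i-1} + dt * k_i * (|m_i| - g_{i-1}).

    k_i = k_down when |m_i| < g_{i-1} (slow decay), else k_up (fast rise).
    The gain values are illustrative assumptions.
    """
    k = k_down if abs(m) < g_prev else k_up
    return g_prev + dt_ms * k * (abs(m) - g_prev)
```

With k_up larger than k_down, a burst of delay variation caused by a concurrent loss-based flow raises the threshold, so the over-use detector does not keep signalling and starving the delay-based flow.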

The figure below shows how the flow rate dynamics and the one-way delay variations settle after the introduction of the adaptive threshold.

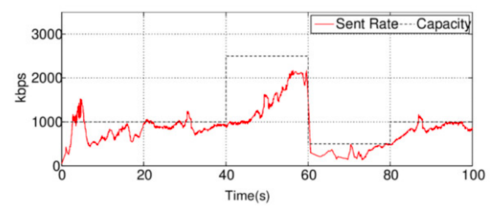

However, this algorithm still exhibits several issues. One issue is that the sending rate tracks a time-varying channel capacity with a large settling time. As an example, the figure below shows that the sending rate takes more than 20 seconds to track the link capacity after the capacity is increased at t = 40 s.

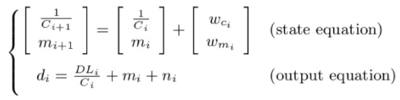

Moreover, the following state space and output equations describing the one-way delay variations lack observability in some conditions:

where:

- C_i is the link capacity;

- m_i is the one-way queuing delay variation;

- d_i is the total one-way delay variation;

- DL_i is the difference in size between two consecutive video frames.

The complex non-linear interacting control equations governing a GCC flow make it impossible to get a rigorous mathematical proof of properties such as stability and rate of convergence. In view of this issue, an important goal will be to design a congestion control algorithm so that a mathematically tractable controlled system is obtained and key properties, such as stability, convergence rate, and observability can be proven.

The proposed theoretical framework could be leveraged by researchers to design and analyze congestion control algorithms for real-time flows. It will also pave the way for new studies focusing on the interplay between congestion control algorithms placed at the end-points and control functionalities placed in the network, such as AQM and, more interestingly, SDN switches. In particular, the interplay between end-to-end delay-based congestion control algorithms and dynamic bandwidth allocation algorithms in SDN switches (e.g., implementing network slicing) is a fundamental research issue that has not been explored.

Moreover, the research carried out in this project may contribute to the design of methods for robust control of time-delay systems and to the design of techniques for estimating the one-way delay variation experienced by packets traveling over the Internet.

Cooperation between e2e congestion control and in-network control functionalities

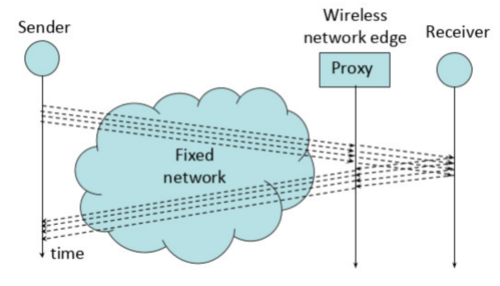

The second line of development for the proposed algorithms targets the specific context of broadband cellular networks. The main idea we intend to investigate is to introduce a proxy at the edge of the cellular network that helps exploit the variable capacity of the wireless link without inducing wild variations of the TCP congestion window, and hence connection instability and large delay variations. Such variations have been pointed out as a potential issue of current congestion control approaches in 5G networks [Zha19].

We assume that cross-layer functions can be devised at the edge so that the wireless channel capacity resulting from the complex interplay of radio propagation, modulation and coding, multiple access, and scheduling can be monitored and possibly predicted.

The proxy at the edge plays the role of an adaptive flow controller that modulates the advertised window signaled back to the remote TCP data source so as to maintain low queueing delay at the connection bottleneck while utilizing the available capacity efficiently.

A scheme of the envisaged control architecture is depicted in the following figure:

The main idea is to move the receiver-side algorithms developed in the first part of the project and described in the first part of this section to the proxy.

Split TCP approaches have already been proposed, investigated, and tested experimentally [Kop02][LeW16]. The novelty we intend to introduce is as follows: (i) focusing on low-delay traffic, hence maintaining low queueing delay at the bottleneck; (ii) introducing a proxy-side estimation algorithm for jitter and IAT; (iii) exploiting the advertised window to drive congestion control from the proxy.

The proxy-side algorithm workflow can be concisely stated as follows:

- Phase 1 (initialization): estimate the pipe capacity and current utilization of the pipe.

- Phase 2 (probing): increase the advertised window and check whether the sender matches it.

- Phase 3 (adaptation): tracking of available capacity.
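The three phases can be illustrated with a toy controller. Everything below is a hypothetical sketch: the class name, the use of the bandwidth-delay product (capacity times minimum RTT) as the target window, the one-MSS probing step, and the 90% "sender matches" test are assumptions chosen only to make the workflow concrete.

```python
from dataclasses import dataclass


@dataclass
class ProxyController:
    mss_bytes: int = 1448
    phase: str = "init"
    awnd_bytes: int = 0  # advertised window signalled to the TCP sender

    def step(self, capacity_bps: float, min_rtt_s: float,
             delivered_bps: float) -> int:
        # Target window: bandwidth-delay product, which keeps the queue near empty.
        bdp = max(self.mss_bytes, int(capacity_bps * min_rtt_s / 8))
        in_flight = delivered_bps * min_rtt_s / 8
        if self.phase == "init":
            # Phase 1: start from the current utilization of the pipe.
            self.awnd_bytes = min(bdp, max(self.mss_bytes, int(in_flight)))
            self.phase = "probing"
        elif self.phase == "probing":
            # Phase 2: grow the window only if the sender actually fills it.
            if in_flight >= 0.9 * self.awnd_bytes:
                self.awnd_bytes = min(bdp, self.awnd_bytes + self.mss_bytes)
            if self.awnd_bytes >= bdp:
                self.phase = "adaptation"
        else:
            # Phase 3: track the (possibly time-varying) available capacity.
            self.awnd_bytes = bdp
        return self.awnd_bytes
```

In this sketch `capacity_bps` stands for the output of the proxy-side estimator; how that estimate is produced from jitter and IAT measurements is the subject of the project and is not modeled here.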

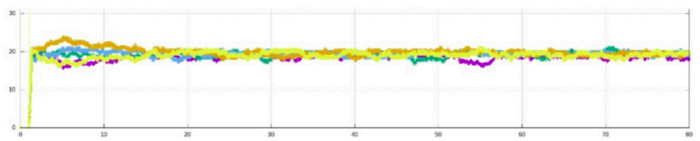

Preliminary results on the time evolution of the throughput of four TCP connections sharing the same bottleneck are shown in the following figure. It should be stressed that almost full and fair utilization of the bottleneck bandwidth is achieved while keeping the bottleneck buffer essentially empty.

We plan to apply the rigorous control-theoretical framework developed in the first part of this project to this proxy-based approach and then extend the performance evaluation, both by means of ns-3 based simulations and experiments, to several interesting scenarios (e.g., different RTT values, asymmetric traffic, competition with legacy congestion control flows).

Finally, we plan to evaluate the potential of in-band network telemetry (INT) functionality to support low-delay congestion control in the same framework outlined above. INT could provide detailed additional information on the congestion status of the connection path, thus helping to refine the control accuracy besides estimates of IAT and variation of IAT at the receiver. The effectiveness of INT, when properly supported by intermediate routers, has been investigated in data center environments in [LiY19].

Performance evaluation methodology

A set of requirements, such as low delay and intra- and inter-protocol fairness, will be defined by taking into account the IETF RMCAT WG recommendations [RFC8836].

The proposed congestion control algorithm will be implemented in the open-source Chromium web browser. The performance evaluation will be carried out in two phases. In the first phase, the algorithm will be tested in a laboratory testbed, where tools that emulate a Wide Area Network make it possible to run repeatable experiments. In the second phase, the algorithm will be tested in the browser over the real Internet, with access through a 5G station. In setting up test scenarios we will also refer to [RFC8869].

The experiments in the controlled testbed will consider the scenarios defined at IETF in [RFC8867].

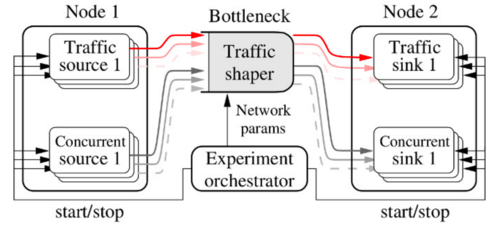

The figure below shows an example of the architecture of the envisaged controlled testbed to be used in the project.

In this phase, we will also compare the proposed congestion control algorithm with other solutions proposed at the IETF RMCAT WG, such as NADA by Cisco [Zhu13] and SCREAM by Ericsson [Joh14]. Recent proposals [Aru18], [DONG18] will also be considered.

In the second phase, thanks to our active collaboration with Google, we will be able to experiment with the proposed algorithm on a large set of Google Chrome users who employ WebRTC to establish video-conferencing sessions. This will produce a large experimental dataset that we aim to release.

Moreover, we will test the proposed algorithm in the 5GENESIS EU testbed [1] and in the UiO’s Sustainable Immersive Networking Lab (SIN-Lab), both located at the University of Oslo under the responsibility of Prof. Alay Ozgu. 5GENESIS is a 5G testbed, and SIN-Lab is a playground for immersive networking research that focuses on providing a true sensation of presence in a remote location through haptic interaction. SIN-Lab consists of 5G equipment, multimedia equipment, and haptics equipment. In terms of 5G/B5G, it offers two powerful SDRs (USRP N310) to run a 5G/B5G RAN and UE with open-source software such as Open Air Interface.

Furthermore, it makes available user equipment that supports multi-connectivity over 4G, 5G, and Wi-Fi, along with a set of 5G phones.

For the multimedia equipment, SIN-Lab provides state-of-the-art cameras and LIDARs (Intel RealSense Tracking Camera (T265), Velodyne LIDAR (VLP-16), Intel RealSense LIDAR (L515), Azure Kinect) and several headsets for VR (HTC Vive, Oculus Rift, several Oculus Quest 2) and AR (Microsoft Hololens, Project Northstar). For the haptics equipment, SIN-Lab has several wearable devices such as StretchSense Haptic Gloves (with motion capture), Prime X Haptic VR (including gloves with haptic feedback), Forte Data Gloves, Ultrahaptics Stratos (in-air touch feedback), and BHaptics Full Body Suit (with 40 motors providing haptic feedback on the human torso).

Finally, the proposed algorithm will be tested in autonomous navigation scenarios at the Mobile Robotics and Embedded Control (MOBIREC) laboratory at Politecnico di Bari, which hosts several mobile robots and a VICON tracking system composed of 8 Vero cameras. In this scenario, the VICON tracking system will accurately and continuously estimate the position of the mobile robots in an arena measuring approximately 40 square meters, and the proposed algorithm will be used to stream data to the robots in real time. The estimated pose will be used by the robots to form a closed-loop control system for autonomous navigation.